Interaction-aware behavior planning for autonomous vehicles validated with real traffic data

Abstract

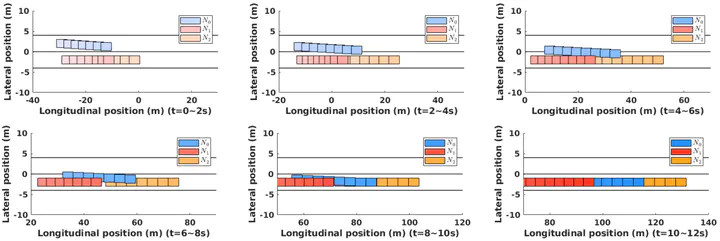

Autonomous vehicles (AVs) must interact with other traffic participants, who may be cooperative or aggressive, attentive or inattentive. These characteristics lead to markedly different interactive behaviors, so to drive safely and efficiently, AVs must account for this uncertainty when planning their own behavior. In this paper, we formulate the behavior planning problem as a partially observable Markov decision process (POMDP) in which the cooperativeness of other traffic participants is treated as an unobservable state. For each cooperativeness level, we learn a human behavior model from real traffic data via the principle of maximum likelihood. The resulting POMDP is then solved by Monte Carlo tree search. We verify the proposed algorithm both in simulation and on real traffic data in a lane-change scenario, and the results show that it successfully completes the lane changes without collisions.
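To make the role of the unobservable cooperativeness state concrete, the following minimal sketch shows a standard Bayesian belief update over a discrete hidden variable. It is an illustration only, not the paper's implementation: the two cooperativeness levels, the Gaussian observation model, the predicted accelerations in `PREDICTED_ACCEL`, and the function names are all assumptions introduced here.

```python
import math


def gaussian_likelihood(observed, predicted, sigma=1.0):
    """Unnormalized Gaussian likelihood of an observed acceleration.

    Assumption: each behavior model predicts a mean acceleration and the
    observation noise is Gaussian with standard deviation `sigma`.
    """
    return math.exp(-0.5 * ((observed - predicted) / sigma) ** 2)


def update_belief(belief, likelihoods):
    """Bayes rule: posterior(level) is proportional to prior(level) * likelihood(level)."""
    unnormalized = {level: belief[level] * likelihoods[level] for level in belief}
    total = sum(unnormalized.values())
    if total == 0.0:
        # Observation is impossible under every model; keep the prior.
        return dict(belief)
    return {level: value / total for level, value in unnormalized.items()}


# Hypothetical behavior models: predicted acceleration (m/s^2) of the other
# vehicle after the AV signals a lane change. These numbers are made up.
PREDICTED_ACCEL = {"cooperative": -1.0, "aggressive": 1.0}

# Start from a uniform prior over the hidden cooperativeness level.
belief = {"cooperative": 0.5, "aggressive": 0.5}

# The other vehicle is observed to decelerate.
observed_accel = -0.8
likelihoods = {
    level: gaussian_likelihood(observed_accel, predicted)
    for level, predicted in PREDICTED_ACCEL.items()
}
belief = update_belief(belief, likelihoods)
```

After the update, the belief shifts toward the cooperative hypothesis because the observed deceleration is closer to that model's prediction. In a POMDP planner, a belief of this kind would be what the tree search samples from when simulating the other vehicle's responses.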